Augmented Reality

Augmented Reality (AR) turns the surrounding environment into a digital platform by placing virtual objects in the real world in real time. AR is used in a variety of applications, including gaming, digital retail, navigation, and design.

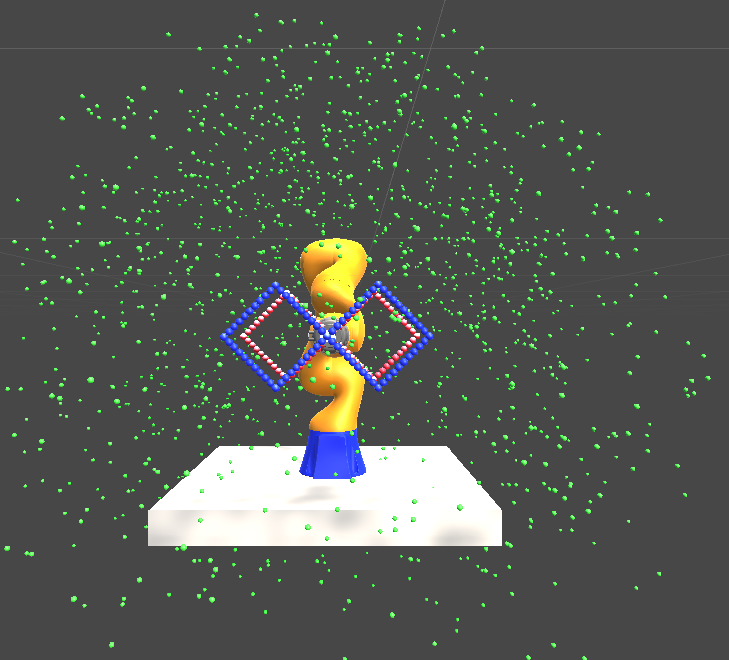

Our research group uses AR to estimate the “safety aura” around a robot, which can help make interaction between humans and robots safer. The application developed in the ARMS laboratory generates an AR environment that overlays virtual safety information on the user's view during collaborative work with Variable Stiffness Actuated (VSA) robots. Virtual objects marking safe and danger zones are generated from features identified in the real-world environment within the robot's workspace.

All Publications

Safety Aura Visualization for Variable Impedance Actuated Robots.

Makhateva, Z.; Zhakatayev, A.; and Varol, H. A. Jan 2019. In 2019 IEEE/SICE International Symposium on System Integration (SII).